Software Development

(In a small-scale research context)

It is not hard to argue that the creation of software is the greatest human cultural achievement to date. But writing software well is not easy and much sophisticated methodology has been proposed for software development, primarily addressing the needs of large software companies and enterprise-scale systems. Certainly: once software development becomes the task of teams, and systems become larger than what one person can remember confidently, failure is virtually guaranteed if the task can't be organized in a structured way.

But our work often does not fit this paradigm, because in the bioinformatics lab the requirements change quickly. The reason is obvious: most of what we produce in science consists of one-off solutions. Once one analysis runs, we publish the results, and we move on. There is limited value in doing an analysis over and over again. However, this does not mean we can't profit from applying the basic principles of good development practice. Fortunately that is easy. There actually is only one principle.

Make implicit knowledge explicit.

Everything else follows.

Collaborate

Making project goals explicit and making progress explicit are crucial, so that everyone knows what's going on and what their responsibilities are. Collaboration these days is distributed and online. Here is a list of options you ought to have tried:

- Schedule regular face-to-face meetings. If you can't be in the same room, Google Hangouts may work (up to ten people). Old-time developers often use IRC chat rooms.

- A wiki such as our Student Wiki is obviously a good way to structure, share and collaboratively edit information.

- Software sharing and code collaboration are best managed through a github repository. Version control, which github provides through git, is an essential part of all software development.

- Dynamic coauthoring capabilities are offered by Etherpad installations such as the one hosted by Wikimedia.

- You have probably collaborated with Google Drive text, spreadsheet or presentation documents before. These have superseded many other online offerings.

- A robust system for shared file storage is Dropbox.

- Trello appears to be a nice tool to distribute work-packages and keep up to date with discussions, especially if your "team" is distributed.

- I like Kanbanery as a structured To-Do list for my own time-management, but it can also be adapted to project workflows.

- There is a lot of turnover with all of these tools, and it's important to back up your important data to alternate locations. Offerings may go out of date or get absorbed by other services. Are there collaboration tools you like that I haven't mentioned? Let me know ...

Plan

The planning stage involves defining the goals and endpoints of the project. We usually start out with a vague idea of something we would like to achieve. We need to define:

- where we are;

- where we want to be;

- and how we will get there.

For an example of a plan, refer to the 2016 BCB420 Class Project. There, we lay out a plan in three phases: Preparation, Implementation and Results. This is generic: the preparation phase implies an analysis of the problem, which focusses on what will be accomplished, independent of how this will be done. The results of the analysis can be a requirements document (here is a link to the ABP Requirements template - a template to jumpstart such a document) or a less formal collection of goals.

The most important achievement of the plan is to break down the project into manageable parts and define the Milestones that characterize the completion of each part.

Design

Design encompasses a range of activities: conceptualizing the system, defining requirements, structuring components and interfaces, and providing roadmaps for deployment and maintenance. All of these are necessary, but none of them matter if you are solving the wrong problem.

In the problems we deal with, it is a good idea to follow an architecture-centric design process: we explore the requirements with the specific goal of drafting an overall architecture, and we draw up a detailed architecture to make the components and their interfaces explicit. Typically this will involve some modelling, and there are different ways to model a system.

- Structural modelling describes the components and interfaces. The components are typically pieces of software, the interfaces are "contracts" that describe how information passes from one piece to another. Structural models include the Data model that captures how data reflects reality and how reality changes the data in our system;

- Behaviour modelling describes the state changes of our system, how it responds to input and how data flows through the system. In data-driven analysis, a data flow diagram may capture most of what is important about the system.

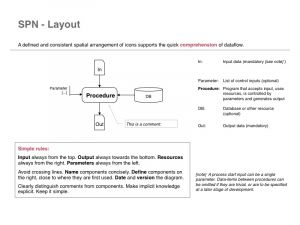

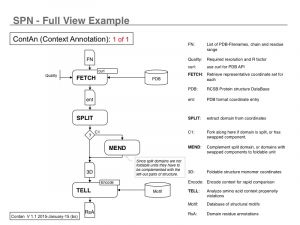

- SPN (Structured Process Notation) is a data flow diagram method that I find particularly suited to the kind of code that we often write in bioinformatics: integration of data, transformation, and analytics, all passing through well-defined intermediate stages. Read more about it here.
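The same idea carries over to code. The following is a minimal sketch, not an implementation of SPN itself: a hypothetical three-stage flow (integrate, transform, analyse) in base R, in which each stage is a function with one explicit input and one explicit output. The function names, columns and toy data are made up for illustration.

```r
# Hypothetical three-stage data flow: integrate -> transform -> analyse.
# Each stage has one explicit input and one explicit output, so intermediate
# results can be inspected, tested and saved on their own.

integrateData <- function(expr, annot) {
  # Integration: attach annotations to the expression values by gene ID
  merge(expr, annot, by = "gene")
}

transformData <- function(df) {
  # Transformation: log2-transform the counts, with a pseudocount of 1
  df$log2Count <- log2(df$count + 1)
  df
}

analyseData <- function(df) {
  # Analytics: mean log2 count per pathway
  aggregate(log2Count ~ pathway, data = df, FUN = mean)
}

# Toy input data, made up for illustration
expr  <- data.frame(gene = c("a", "b", "c"), count = c(1, 3, 7))
annot <- data.frame(gene = c("a", "b", "c"), pathway = c("P1", "P1", "P2"))

stage1 <- integrateData(expr, annot)   # intermediate stage 1
stage2 <- transformData(stage1)        # intermediate stage 2
result <- analyseData(stage2)
print(result)
```

Because every intermediate stage is a named object, each one can be checked in isolation, which is exactly what the data flow diagram makes explicit.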

Whichever modelling method is adopted, make sure that it is explicit. Many aspects of the widely used UML standard suffer from poor information design; this can lead to hard-to-understand graphics that obfuscate rather than clarify the issues[1]. That, of course, is cargo cult design.

The design objectives have to be clearly articulated. There are many ways to achieve the same end result, but good design will support writing systems that are correct, robust, extensible and maintainable. A very good starting point for the design is a proper separation of concerns.

- More reading

Develop

In the development phase, we actually build our system. It is a misunderstanding to believe that most of the time will be spent in this phase. Designing a system well is hard. Building it, if it is well designed, is easy. Building it, if it is poorly designed, is probably impossible.

A number of development methodologies and philosophies have been proposed, and they go in and out of fashion. In this course we will work with a combination of TDD (Test Driven Development) and Literate programming.

Literate Programming

Literate programming is the idea that software is best described in natural language, focussing on the logic of the program, i.e. the why of the code, not the what. The goal is to ensure that model, code, and documentation become a single unit, and that all this information is stored in one and only one location. The product should be consistent between its described goals and its implementation, seamless in capturing the process from start (data input) to end (visualization, interpretation), and reversible (between analysis, design and implementation).

In literate programming, narrative and computer code are kept in the same file. This source document is typically written in Markdown or LaTeX syntax and includes the programming code as well as text annotations, tables, formulas etc. The supporting software can weave human-readable documentation from this, or tangle executable code. Literate programming with both Markdown and LaTeX is supported by R Studio, which makes the R Studio interface a useful development environment for this paradigm. It is also easy to edit source files with a different editor and process them in base R with the Sweave() and Stangle() functions, or with the knitr package. In our context here, however, we will use R Studio because it conveniently integrates the functionality we need.
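To make "tangle" concrete, here is a minimal sketch using only base R (the utils package). It writes a tiny Sweave (.Rnw) source, with narrative text and one code chunk delimited by <<name>>= and @, to a temporary file, then calls Stangle() to extract just the executable code. The file contents and chunk name are made up for illustration; for R Markdown files, knitr::purl() plays the same tangling role.

```r
# A tiny literate source in Sweave (.Rnw) format: narrative text plus one
# R code chunk that starts with <<name>>= and ends with @.
rnw <- tempfile(fileext = ".Rnw")
writeLines(c(
  "We compute the mean of the first ten integers,",
  "because it is a simple sanity check.",
  "<<meanCheck>>=",
  "x <- 1:10",
  "mean(x)",
  "@",
  "The result should be 5.5."
), rnw)

# Tangle: extract only the executable code into a plain R script.
rScript <- tempfile(fileext = ".R")
utils::Stangle(rnw, output = rScript, quiet = TRUE)

# The narrative is gone; only the code (plus chunk header comments) remains.
tangled <- readLines(rScript)
cat(tangled, sep = "\n")
```

Weaving the same file with Sweave() would instead produce a LaTeX document in which the narrative, the code and its results appear together.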

For exercises on knitr, RMarkdown and LaTeX, follow this link.

Test Driven Development

TDD is meant to ensure that code actually does what it is supposed to do. In practice, we define our software goals and devise a test (or battery of tests) for each. Initially, all tests fail. As we develop, the tests succeed. As development continues:

- we think carefully about how to break the project into components (units) and how to structure them. These should, more or less, do one thing, and one thing only, and not have side effects;

- we discipline ourselves to watch out for unexpected input, edge- and corner cases and unwarranted assumptions;

- we can be confident that later changes do not break what we have done earlier - because our tests keep track of the behaviour.
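A minimal sketch of this cycle in base R, using stopifnot() rather than a testing framework such as testthat. The function gcContent() and its expected behaviour are hypothetical, chosen only to show tests being written before the code they check.

```r
# Step 1: write the tests first. They define what "correct" means for a
# hypothetical function gcContent() that returns the GC fraction of a DNA
# sequence. Before gcContent() exists, running these tests fails.
testGcContent <- function() {
  stopifnot(
    isTRUE(all.equal(gcContent("GGCC"), 1)),
    isTRUE(all.equal(gcContent("ATAT"), 0)),
    isTRUE(all.equal(gcContent("ATGC"), 0.5)),
    isTRUE(all.equal(gcContent("atgc"), 0.5))   # lower case is accepted
  )
  invisible(TRUE)
}

# Step 2: write just enough code to make the tests pass.
gcContent <- function(s) {
  bases <- strsplit(toupper(s), "")[[1]]   # split into single characters
  mean(bases %in% c("G", "C"))             # fraction that are G or C
}

# Step 3: run the tests; they now pass silently.
testGcContent()
```

Later changes to gcContent() are re-checked by simply calling testGcContent() again.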

For an exercise in Test Driven Development, follow this link.

Typically testing is done at several levels:

- During the initial development phases unit testing continuously checks the function of the software units of the system.

- As the code base progresses, code units are integrated and begin interacting via their interfaces - we begin integration testing. Interfaces can be specified as "contracts" that define the conditions and obligations of an interaction. Typically, a contract will define the precondition, postcondition and invariants of an interaction. Focussing on these aspects of system behaviour is also called design by contract. The primary task of integration testing is to verify that contracts are accurately and completely fulfilled.

- Finally, validation tests verify the code and validate its correct execution - just like a positive control in a lab experiment.

- However: Code may be correct, but still unusable in practice. Without performance testing the development cannot be considered to be complete.
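Contracts can be made explicit in the code itself. The sketch below uses base R's stopifnot() to check the preconditions and postconditions of a hypothetical function normalizeScores(); invariants can be asserted in the same way. The function and its contract are made up for illustration.

```r
# Hypothetical example: scale non-negative scores so that they sum to one.
normalizeScores <- function(x) {
  # Preconditions: what the caller must guarantee
  stopifnot(is.numeric(x), length(x) > 0, !anyNA(x),
            all(x >= 0), sum(x) > 0)

  result <- x / sum(x)

  # Postconditions: what this function guarantees to the caller
  stopifnot(length(result) == length(x),
            isTRUE(all.equal(sum(result), 1)))
  result
}

normalizeScores(c(1, 1, 2))   # returns 0.25 0.25 0.50
```

An integration test then only needs to exercise the interface: pass valid and invalid input, and confirm that the contract is either fulfilled or the call fails.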

Testing supports maintenance. When you find a bug, write a test that fails because of the bug, then fix the bug, and with great satisfaction watch your test pass. Also, be mindful that you may have made the same type of error elsewhere in your code. Search for these cases, write tests and fix them too.
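A sketch of that workflow with a made-up bug: a first version of a hypothetical function countCodons() rounded the sequence length divided by three, and so reported two codons for a five-base sequence.

```r
# Buggy first version (kept here as a comment for reference):
#   countCodons <- function(s) round(nchar(s) / 3)   # "ATGCA" gives 2

# 1. Write a test that fails because of the bug ...
testCountCodons <- function() {
  stopifnot(countCodons("ATGCA") == 1,   # the case that exposed the bug
            countCodons("ATG")   == 1,
            countCodons("")      == 0)
  invisible(TRUE)
}

# 2. ... fix the bug: only complete codons count (integer division) ...
countCodons <- function(s) nchar(s) %/% 3

# 3. ... and watch the test pass.
testCountCodons()
```

The same kind of error (round() where integer division was intended) may well be hiding in other functions that compute codon or window counts, and deserves its own tests.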

That said, one of the strongest points of TDD is that it supports refactoring! Work in a cycle: Write tests → Develop → Refactor. This allows you to get something working quickly, then adopt more elegant / efficient / maintainable solutions that do not break functionality.
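As a sketch of the Write tests → Develop → Refactor cycle: the same (hypothetical) tests check a quick loop-based reverse complement and a shorter refactored version built on base R's chartr(). Because both versions pass, the refactoring did not break functionality.

```r
# Tests, written first; f is the implementation under test.
testRevComp <- function(f) {
  stopifnot(f("ATGC")    == "GCAT",
            f("AAAA")    == "TTTT",
            f("GATTACA") == "TGTAATC")
  invisible(TRUE)
}

# Develop: a first working version with an explicit loop.
revCompLoop <- function(s) {
  pairs <- c(A = "T", T = "A", G = "C", C = "G")
  bases <- strsplit(s, "")[[1]]
  out <- character(length(bases))
  for (i in seq_along(bases)) {
    # complement each base and write it to the mirrored position
    out[length(bases) - i + 1] <- pairs[[bases[i]]]
  }
  paste(out, collapse = "")
}

# Refactor: complement all bases at once with chartr(), then reverse.
revCompVec <- function(s) {
  complement <- chartr("ATGC", "TACG", s)
  paste(rev(strsplit(complement, "")[[1]]), collapse = "")
}

# The same tests guard both versions.
testRevComp(revCompLoop)
testRevComp(revCompVec)
```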

Version Control

Must.

Fail Safe or Fail Fast?

Testing for correct input is a crucial task for every function, and R especially goes out of its way to coerce input to the type that is needed. This makes functions fail safe. Do consider the opposite philosophy, however: "fail fast", to produce fragile code. You must test whether input is correct, but a good argument can be made that incorrect input should not be fixed; instead, the function should stop the program and complain loudly and explicitly about what went wrong. I have seen too much code that just stops executing. It is inexcusable for a developer not to take the time to write the very few statements that are needed for the user to understand what was expected, and what happened instead. This, once again, makes implicit knowledge explicit: it helps the caller of the function to understand how to pass correct input, and it prevents code from executing on wrong assumptions. In fact, failing fast may be the real fail safe.
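The contrast can be sketched with two versions of a hypothetical function that averages sequence lengths. The fail-safe version silently coerces its input, so a typo such as "1O" (letter O instead of zero) simply disappears; the fail-fast version stops with a message that says what was expected and what arrived instead.

```r
# Fail safe: coerce whatever arrives. "1O" becomes NA (with only a warning)
# and is then quietly dropped, so the result rests on a wrong assumption.
meanLengthSafe <- function(lengths) {
  mean(as.numeric(lengths), na.rm = TRUE)
}

# Fail fast: check the input and stop with an explicit, informative message.
meanLengthFast <- function(lengths) {
  if (!is.numeric(lengths)) {
    stop("meanLengthFast() expects a numeric vector, but received an object ",
         "of class \"", class(lengths)[1], "\".", call. = FALSE)
  }
  if (anyNA(lengths)) {
    stop("meanLengthFast(): input contains ", sum(is.na(lengths)),
         " NA value(s); remove or impute them before calling.", call. = FALSE)
  }
  mean(lengths)
}

suppressWarnings(meanLengthSafe(c("10", "1O", "30")))  # 20: the bad value vanished
try(meanLengthFast(c("10", "1O", "30")))               # stops and explains why
```

The few extra lines in meanLengthFast() are exactly the "very few statements" that tell the caller how to pass correct input.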

Code

Here is a small list of miscellaneous best-practice items for the phase when actual code is being written:

- Be organized. Keep your files in well-named folders and give your file names some thought.

- Use version control: git or svn.

- Use an IDE (Integrated Development Environment). Syntax highlighting and code autocompletion are nice, but good support for debugging (stepping through code, examining variables, setting breakpoints and conditional breakpoints) is essential for development. For R development, the R Studio environment provides syntax highlighting and a symbolic debugger.

- Design your code to be easily extensible and only loosely coupled. Your requirements will change frequently; make sure your code is modular and nimble enough to change with them.

- Design reusable code. This may include standardized interface conventions and separating options and operands well.

- DRY (Don't repeat yourself): create functions or subroutines for tasks that need to be repeated. Or, seen from the other way around: whenever you find yourself repeating code, it's time to delegate that to a function instead. Incidentally, that also holds for database design: whenever you find yourself intersecting tables with similar patterns, perhaps it's a good idea to generalize one of the tables and merge the data from the other into it.

- KISS (Keep it simple): resist the temptation for particularly "elegant" language idioms and terse code.

- Comment your code. I can't repeat that often enough. Code is read very much more often than it is written. Unfortunately (for you) the one most likely to have to read and understand your convoluted code is you yourself, half a year later. So do yourself the favour of explaining what you are thinking. Don't comment on what the code does - that is readable from the code itself - but on why you do something the way you do.

- Be consistent.
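A small illustration of DRY in base R: the commented-out lines repeat the same cleaning expression for each column; the refactored version names that logic once, as a function, and applies it to every column. Column names, data and the cleaning rule itself are hypothetical.

```r
# Repetitive version: the same expression copied for each column.
#   counts$geneA <- log2(pmax(counts$geneA, 0) + 1)
#   counts$geneB <- log2(pmax(counts$geneB, 0) + 1)
#   counts$geneC <- log2(pmax(counts$geneC, 0) + 1)

# DRY version: the repeated logic lives in one named function ...
cleanCounts <- function(x) {
  # Hypothetical rule: negative counts are treated as artifacts and set to
  # zero, then values are log2-transformed with a pseudocount of 1.
  log2(pmax(x, 0) + 1)
}

# ... which is applied to every column that needs it.
counts <- data.frame(geneA = c(0, 3, -2), geneB = c(7, 1, 15), geneC = c(1, 0, 3))
cols <- c("geneA", "geneB", "geneC")
counts[cols] <- lapply(counts[cols], cleanCounts)
print(counts)
```

If the cleaning rule changes, it now changes in exactly one place.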

Keeping Code organized

I have probably mentioned this several times over, but we don't develop with text editors, and we don't code on the command line, even though we could. To keep code organized, there are several options (I'm writing this from an R perspective, but you can extrapolate):

- Command line: quick to try out simple ideas and syntax. Commands are stored in the "history", and you can navigate through previous commands with the up-arrow and down-arrow keys. Major drawback: this is a volatile way to structure your work, and you don't have a proper record of what you are doing.

- Script files: probably the most general approach. Keep everything in one file. This makes it easy to edit, version, maintain and share code. Most importantly, you have a record of what you were doing (Reproducible Research!). When you write a paper, have a script file for every figure and make sure it runs from start to end when you source() it. You'll be amazed how often this saves your sanity.

- R markdown: literate programming, as discussed above.

- Shiny apps: Shiny is a way to build dynamic Web pages that execute embedded R code. See here for more information.

- Jupyter notebooks: Project Jupyter is a platform to embed code, plots and documentation. It has great advantages and a large following; be sure to understand what it does. Not everyone agrees with the hype, though: read here about some of the downsides. The fact that you can't execute single lines but need to work through code chunk by chunk breaks it for me. This is why I am moving towards:

- RStudio projects: we use RStudio anyway, since it's a pretty good IDE for R. With projects, we can package code and data into a self-contained bundle. Code is available as source, and we can edit and execute it at will. Most importantly, we can put it all under version control, locally and on github. I think this is the perfect combination.

- R notebooks: a recent addition to RStudio's capabilities; they work like Jupyter notebooks.

RStudio Projects

Task:

- In this task you set up a github repository for an R project. You create the project on your local machine, write some code and customize it. Then you upload your local project to your github repository.

- Navigate to your github account (create an account if you don't have one yet.)

- Click on New Repository on your profile page in the "Your repositories" section

- Give the repository a useful, stable name for a project - "sample_project" or "test_project" would be appropriate for this task.

- Check Initialize this repository with a README

- ... and click Create repository.

- Open RStudio

- Click on File → New Project ...

- Select Version Control

- Select Git

- Paste the Repository URL of your Github project ...

- Use the github repository name as your Project directory name and Browse... to select a good folder where the project folder can live on your computer.

- Click Create Project.

- Click File → New File → R Script

- Write some code: for example, write a function that returns n random passwords of length l (where n and l are parameters). l is the number of syllables to use. A syllable is defined as a consonant or consonant cluster (onset) and a vowel or diphthong (nucleus).

- Save the script file in your project directory. Make sure that it has the extension .R (RStudio should have added the extension by default.)

- Set your working directory: use Session → Set Working Directory → To Project Directory

- Use rm(list = ls()) to clear your workspace.

- Open Tools → Project Options... and set the following options:

- in the General pane:

- Restore most recently opened project ... No

- Restore previously open source documents ... Yes

- Restore .RData ... No

- Save workspace ... No

- Always save history ... No

This will ensure you start the project with a clean slate and the same environment every time. If you do not do this, your project may not be fully reproducible, and code that works for you may fail for the people you share it with.

- In the Code editing pane:

- Check all options ON

- Set Tab width to 3 spaces

- Make sure Text-encoding is UTF-8

All other options are probably OK in their default state; packrat should be OFF (but you may turn it ON if you need it at some point and you understand what it does).

- Click OK.

- Now open a new text file (not R script), add a single blank line and save this in your project directory under the name .Rprofile. R uses this file to customize a working session. Right now there is nothing in the file but you can always put something into the file later.

- Close the .Rprofile pane; only your R script should be open. This window will be restored when you open your project, and you don't want .Rprofile to be opened every time you start the project.

- Quit RStudio.

- Open the project folder and delete the .Rhistory file (there is probably one there from when the project was originally created.)

- Now restart R Studio and click File → Open Project in a New Window.... Everything should be there:

- Your workspace should be empty

- Your working directory should be the project folder. Test this with getwd()

- Your source file should be open to use and develop further.

One thing left to do: you haven't synchronized your project on Github yet.

- Open the version control interface with Tools → Version Control & Commit ...

- Click each file's check-box in turn to "stage" it, write a meaningful commit message, and click Commit. Close the log windows.

- Finally: click on Push to upload all changes to your github repository.

- In your browser, navigate back to your github repository and verify that all changes have arrived.

Your project setup is now complete.
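For reference, here is one possible sketch of the password function from this task, in base R. The function name makePasswords() and the onset and nucleus inventories are hypothetical and deliberately short; extend them as you see fit. Note that sample() draws from R's default pseudo-random number generator, which is fine for an exercise but is not a cryptographically secure source for real passwords.

```r
# Hypothetical inventories: onsets are consonants or consonant clusters,
# nuclei are vowels or diphthongs.
ONSETS <- c("b", "d", "f", "g", "k", "l", "m", "n", "p", "r", "s", "t", "v",
            "z", "br", "dr", "fl", "gr", "kl", "pr", "st", "tr")
NUCLEI <- c("a", "e", "i", "o", "u", "ai", "au", "ei", "oi", "ou")

makePasswords <- function(n, l, onsets = ONSETS, nuclei = NUCLEI) {
  # Returns a character vector of n passwords, each built from l syllables.
  # A syllable is one randomly chosen onset followed by one nucleus.
  stopifnot(is.numeric(n), length(n) == 1, n >= 1,
            is.numeric(l), length(l) == 1, l >= 1)
  passwords <- character(n)
  for (i in seq_len(n)) {
    syllables <- paste0(sample(onsets, l, replace = TRUE),
                        sample(nuclei, l, replace = TRUE))
    passwords[i] <- paste(syllables, collapse = "")
  }
  passwords
}

set.seed(112358)   # only to make this example reproducible
makePasswords(n = 3, l = 4)
```

Keeping the inventories as default arguments makes the function easy to test with small, known inputs.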

To review the ideas we covered here, look up the following two R Studio support pages:

Coding style

It should always be your goal to code as clearly and explicitly as possible. R has many complex idioms, and since it is a functional language in which functions can be inserted almost anywhere into expressions, it is possible to write very terse, expressive code. Don't do it. Pace yourself, and make sure your reader can follow your flow of thought. More often than not, the poor soul who will be confused by a particularly "witty" use of the language will be you, yourself, half a year later. There is an astute observation by Brian Kernighan that applies completely:

- "Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it."
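A small illustration, with made-up data: both versions below compute the mean of the three largest non-missing values. The terse one-liner is correct, but the explicit version names each step, so the reader can follow (and debug) one idea at a time.

```r
x <- c(4.2, NA, 3.9, 5.1, 4.8)   # made-up measurements

# Terse: correct, but everything has to be unpacked at once.
terse <- round(mean(sort(x[!is.na(x)], decreasing = TRUE)[1:3]), 2)

# Explicit: the same computation, one named step per idea.
measured <- x[!is.na(x)]                        # drop missing values
ranked   <- sort(measured, decreasing = TRUE)   # largest first
topThree <- ranked[1:3]                         # keep the three largest
explicit <- round(mean(topThree), 2)

stopifnot(identical(terse, explicit))   # same result, different readability
explicit
```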

Over time it has turned out that teaching proper coding style (and thus also a proper approach to thinking about code) warrants its own page: follow this link and study the page well. Will this be on the exam? Yes.

Code Reviews

Regularly meet to review other team members' code. Keep the meetings short, and restrict them to no more than 300 lines at a time.

- The code author walks everyone through the code and explains the architecture and the design decisions. It should not be necessary to explain what the code does - if it is necessary, that points to opportunities for improvement.

- Briefly consider improvements to coding style as suggestions but don't spend too much time on them (don't create a "Bicycle Shed" anti-pattern) - style is not the most important thing about the review. Be constructive and nice - you should encourage your colleagues, not demotivate them.

- Spend most of the time discussing architecture: how does this code fit into the general lay of the land? How would it need to change if its context changes? Is it sufficiently modular to survive? What does it depend on? What depends on it? Does it apply a particular design pattern? Should it? Or has it devolved into an anti-pattern?

- Focus on tests. What is the most dangerous error for the system's integrity that the code under review could produce? Are there tests that validate how the code deals with this? Are there tests for the edge cases and corner cases[2]? This is the best part about the review: bring everyone in the room on board with the real objectives of the project, by considering how one component contributes to them.

- Finally, what's your gut feeling about the code: is there Code Smell? Are there suboptimal design decisions that perhaps don't seem very critical at the moment but that could later turn into inappropriate technical debt? Perhaps some refactoring is indicated; solving the same problem again often leads to vastly improved strategies.

Overall be mindful that code review is a sensitive social issue, and that the primary objective is not to point out errors, but to improve the entire team.

QA

The importance of explicit, structured, proactive QA (Quality Assurance) is all too often not sufficiently appreciated. In my experience this is the single most important reason for projects that ultimately fail to live up to their expectations.

- QA needs to keep track of assets that need to be created.

- Is the plan complete or are there gaps?

- Do all components and critical steps have clearly defined milestones?

- Is there a timeline?

- Is the project on track?

- QA needs to keep track of assets that exist.

- Has the design been documented to acceptable standards?

- Does the design address the requirements?

- Have the interfaces between modules been specified?

- Is the code written to acceptable standards, is it sufficiently commented, properly modularized?

- Have code reviews been organized?

- Is the code correct? Have test cases been designed (unit tests, integration tests) and has the code been tested? Do runs with true- and false- positives give the expected results? Do comparisons against benchmarks achieve results within acceptable tolerance?

- And for both -

- Is the project being documented?

Adding QA ad hoc, as an afterthought, is a bad idea. QA makes a project great when it is coordinated by a capable individual who catalyzes the whole team to do their best in an uncompromising dedication to excellence.

Deploy and Maintain

In our context, deployment may mean a single run of discovery, and maintenance may be superfluous as the research agenda moves on.

But this does not mean we should ignore best practice in scientific software development: simple, but essential aspects like using version control for our code, using IDEs, writing test cases for all code functions etc. These aspects are very well covered in the open source Software Carpentry project and courses. Free, online, accessible and to the point. Go there and learn:

Notes

- [1] I would include any kind of overly decorated relationship indicator in this critique, especially in UML association diagrams, or in Crow's foot notation. Symbols need to be iconic, focus on the essence of the message, and resist any decorative fluff.

- [2] A software engineer walks into a bar and orders a beer. Then he orders 0 beers. Then orders 2147483648 beers. Then orders a duck. Then orders -1 beers, poured into a bathtub...

Further reading and resources

- Practice

- Concepts

Greg Wilson et al. on the excellent principles of the Software Carpentry workshops:

Wilson et al. (2014) Best practices for scientific computing. PLoS Biol 12:e1001745. (pmid: 24415924)

- Software design

- Software pattern

- Software development process

- Software architecture

- Portal:Software testing

- Kim Waldén and Jean-Marc Nerson: Seamless Object-Oriented Software Architecture: Analysis and Design of Reliable Systems, Prentice Hall, 1995.

Sandve et al. (2013) Ten simple rules for reproducible computational research. PLoS Comput Biol 9:e1003285. (pmid: 24204232)

Article in Nature Biotechnology; note that "successful" here is meant to imply "widely used". David Baker's Rosetta package is not mentioned, for example. Nevertheless, there are good insights in this.

Altschul et al. (2013) The anatomy of successful computational biology software. Nat Biotechnol 31:894-7. (pmid: 24104757)

Peng (2011) Reproducible research in computational science. Science 334:1226-7. (pmid: 22144613)

Computational science has led to exciting new developments, but the nature of the work has exposed limitations in our ability to evaluate published findings. Reproducibility has the potential to serve as a minimum standard for judging scientific claims when full independent replication of a study is not possible.

- Miscellaneous